OK, stop groaning over the title of this post. It’ll probably be the best pun you read until you flick over to a slightly wittier SQL blog.

I’ve recently been upgrading an application from SQL Server 2000 to SQL Server 2005, and analysing performance between the two. A common technique with this application is to create reporting stored procedures that have many different parameters, and allow the user to enter as few, or as many as they like. (And then the text strings get a ‘%’ attached at each end, and thrown to a LIKE operation.)

For example, consider the following stored procedure:

USE AdventureWorks2008 GO CREATE PROCEDURE dbo.TestOR @PersonType nchar(2) , @FirstName nvarchar(50) , @LastName nvarchar(50) AS SELECT * FROM Person.Person WHERE (PersonType = @PersonType OR @PersonType IS NULL) AND (FirstName = @FirstName OR @FirstName IS NULL) AND (LastName = @LastName OR @LastName IS NULL) GO EXEC dbo.TestOR @PersonType = 'EM', @FirstName = null, @LastName = null EXEC dbo.TestOR @PersonType = 'EM', @FirstName = 'Rob', @LastName = null EXEC dbo.TestOR @PersonType = null, @FirstName = null, @LastName = 'Caron' GO

You can see that the driving force here is the pattern (FirstName = @FirstName OR @FirstName IS NULL). This means that if you do not supply a value for @FirstName (or set it to NULL), then the second part of the OR will always return TRUE, and so all rows will be selected, cancelling out the need for first part.

This appears to be a very good method of creating a single stored procedure that can flexibly take many different parameters. It probably performed quite well in development too, until the amount of data increased.

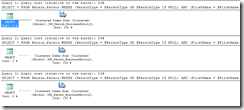

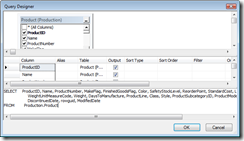

Let’s have a look at the execution plan:

Oh dear. Table scans. This example is only using a single table, but you can imagine what would happen if search parameters could be in multiple tables.

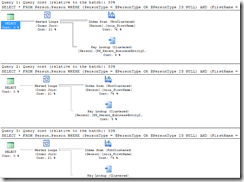

An additional problem with this method is that of parameter sniffing – if the query is initially run with a LastName only, then the execution plan will be optimised for a LastName – and this may not be appropriate for the next execution. This can be demonstrated by running “EXEC sp_recompile dbo.TestORâ€, and then running query #3, then #2, then #1. The execution plans are the same for all three, but the plan has changed, as a different index has been used:

There are a few ways to fix this. One is to create a different path for each combination of variables that are optional. However, this rapidly becomes unwieldy – for this example, we would need nine different paths, all with very similar code!

Another option is to move to dynamic SQL:

CREATE PROCEDURE dbo.TestDynamic @PersonType nchar(2) , @FirstName nvarchar(50) , @LastName nvarchar(50) AS DECLARE @sql nvarchar(max) DECLARE @params nvarchar(max) SET @sql = 'SELECT * FROM Person.Person WHERE (1=1) ' IF @PersonType IS NOT NULL SET @sql = @sql + ' AND PersonType = @PersonType ' IF @FirstName IS NOT NULL SET @sql = @sql + ' AND FirstName = @FirstName ' IF @LastName IS NOT NULL SET @sql = @sql + ' AND LastName = @LastName ' SET @params = '@PersonType nchar(2), @FirstName nvarchar(50), @LastName nvarchar(50)' EXEC sp_executesql @sql, @params, @PersonType = @PersonType , @FirstName = @FirstName, @LastName = @LastName GO

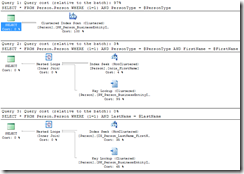

Let’s have a look at the new execution plans:

Much better! We now have three different execution plans, and three different SQL statements being executed.

There are a number of benefits to switching to dynamic SQL in this case:

- The query is simpler. This means that the query plans are likely to be more stable – there is less change of a bad plan being generated.

- Each combination of parameters will get its own execution plan, and this will be stored in the cache – in this case, we could have nine different plans.

- The code is easier to maintain. It’s a little harder to read, but you only have a single copy of the query – it’s just built up along the way.

- The users still get to have their flexible interface – very important when the upgrade is supposed to change as few things as possible, functionality-wise.