Cascading deletes are one of those features that people either love or hate. The haters prefer to delete all child rows first, and then delete the parent, while the lovers like the idea of deleting from one table and having every other table in the database empty out. I have to admit that I fall into both camps – I don’t use it too often, and most of the time I’ll opt for deleting the children first, even with the cascading delete turned on.

However, performance can suffer when you have a cascading delete, and as it’s more hidden than the two step Delete-Children-First method, it can be harder to realise how an index can increase performance.

Imagine that you have three tables: Employee, Equipment, and EquipmentLoans, where an employee can borrow equipment:

CREATE TABLE Employee ( EmployeeID int IDENTITY NOT NULL PRIMARY KEY , FirstName varchar(30) , LastName varchar(30) ) CREATE TABLE Equipment ( EquipmentID int IDENTITY NOT NULL PRIMARY KEY , Description varchar(30) ) CREATE TABLE EquipmentLoans ( EquipmentID int not null , EmployeeID int not null , DateDue datetime not null , CONSTRAINT fk_EquipLoans_EquimentID FOREIGN KEY (EquipmentID) REFERENCES Equipment(EquipmentID) ON DELETE CASCADE , CONSTRAINT fk_EquipLoans_EmployeeID FOREIGN KEY (EmployeeID) REFERENCES Employee(EmployeeID) ON DELETE CASCADE , PRIMARY KEY (EquipmentID, EmployeeID) ) INSERT INTO Equipment (Description) VALUES ('Laptop'); INSERT INTO Equipment (Description) VALUES ('Projector'); INSERT INTO Equipment (Description) VALUES ('Laser Pointer'); INSERT INTO Employee (FirstName, LastName) VALUES ('Scott', 'Wood'); INSERT INTO Employee (FirstName, LastName) VALUES ('John', 'Otto'); INSERT INTO Employee (FirstName, LastName) VALUES ('Bart', 'Johnson'); INSERT INTO EquipmentLoans VALUES (1,1, '2008-08-14') INSERT INTO EquipmentLoans VALUES (3,1, '2008-08-14') INSERT INTO EquipmentLoans VALUES (2,2, '2008-07-21')

Now we have a clustered index on Employee (EmployeeID), one on Equipment(EquipmentID), and one on EquipmentLoans(EquipmentID, EmployeeID). Because we’ve added cascading deletes on the EquipmentLoans table for both Employees and Equipment, we can automatically delete rows from EquipmentLoans when either the Employee or Equipment is deleted. Let’s have a look at how this works. If we run this command:

DELETE FROM Equipment WHERE EquipmentID = 3

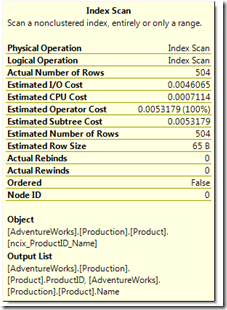

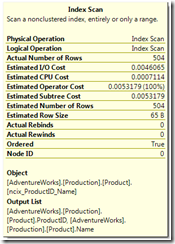

Then the clustered index of EquipmentLoans will be looked up to find any Equipment with an ID of 3 and delete that row. Next, it will look up the clustered index of Equipment and delete the row with an EquipmentID of 3. This is very fast, as it uses index lookups.

However, what if we delete an employee?

DELETE FROM Employee WHERE EmployeeID = 2

There is only the clustered index on EquipmentLoans, so to find any rows with EmployeeID = 2, the clustered index must be scanned. If there are a lot of rows, this could take a while. After this is done, the clustered index for Employees will be looked up, and the Employee with ID 2 will be deleted.

If there was no cascading delete, you would still need to perform two commands:

DELETE FROM EquipmentLoans WHERE EmployeeID = 2 DELETE FROM Employee HERE EmployeeID = 2

However, it’s easy to see which of these two commands is performing badly (i.e. the first one), and a nonclustered index on EquipmentLoans(EmployeeID) is an obvious fix. Once this index is added, it can be used to find all EquipmentLoans where EmployeeID = 2 and delete them – no more table scan. This applies whether it’s in the cascading delete or not.

Note that if you look at the execution plans for these queries, you will only see table scans. This is because these demo tables are all small enough to fit into a single 8 KB page. If there were thousands of rows, the indexes would come into play.