It’s finally happened. The table you created five years ago with an clustered integer IDENTITY column is about to hit 231 values (2,147,483,648). Five years ago, you were inexperienced with SQL Server, and had no idea that this table would grow this large – or that you’d be around to see it!

First, you reseed the IDENTITY value, back to -231.

DBCC CHECKIDENT (LargeTable, RESEED, -2147483647)

This should allow you to get another couple of years out of the table, and you will have enough time to implement a better table structure.

A flash of inspiration

A few hours later, you’re relaxing after work, quietly pleased about the bullet you’ve dodged today. A thought suddenly strikes you like a lightning bolt. You recall that inserts into a clustered index work best when inserting into an ever increasing value, at the end of the index. Can you have made a massive error in judgement in forcing all inserts to always happen just before identity value 1?

While you toss and turn about this all night, feel free to consider the issue before reading on. What do you think will happen? Will you have an enormous number of page splits are you constantly run into identity 1? This is obviously a frequently inserted table, given that it has had 2 billion rows added in the past five years (1.1 million per day).

Testing the hypothesis

Here’s a nice block of code to test the problem. We create a table with 1000 rows approximately 1000 bytes long, to fit 8 rows per page.

CREATE DATABASE IdentityTest GO USE IdentityTest GO -- Create a test table, just under 1000 bytes per row. We can fit 8 rows per page. CREATE TABLE T (i INT IDENTITY PRIMARY KEY, j char(960)) GO SET NOCOUNT ON -- Backup the database so we have a consistent log to examine ALTER DATABASE IdentityTest SET RECOVERY FULL BACKUP DATABASE IdentityTest TO DISK = 'C:\Work\IdentityTest_Full.bak' WITH INIT GO -- Seed the table with 1000 rows with identity ranges from 1-1000 INSERT INTO T (j) VALUES ('AAAAAAA') GO 1000 -- Reseed back to a large negative number DBCC CHECKIDENT (T, RESEED, -2147483647) GO -- Add another 100 rows with negative identities counting towards positive INSERT INTO T (j) VALUES ('BBBBBBB') GO 100 -- Examine the transaction log to see page split operations. -- Note the "CheckIdent" Transaction Name around row 1136 where we reseeded. SELECT [Transaction Name], * FROM fn_dblog(null, null) WHERE [Transaction Name] IS NOT NULL ORDER BY [Current LSN] GO -- Cleanup USE master GO DROP DATABASE IdentityTest GO

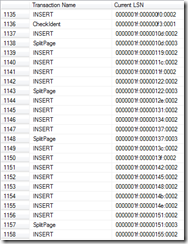

We can now clearly see the page split pattern in the transaction log. As expected, during inserts prior to the re-seeding, we have a pattern of 8 inserts before a Page Split occurs, signalling that no more rows will fit onto this page.

Analysis of the results

After we run the CheckIdent operation (row 1136), we can see that the next insert operation causes a page split, because we are trying to insert onto the first page of the clustered index. This already has 8 rows on it (IDs 1-8), so ID -2,147,483,646 won’t fit. The new page ends up with the new row, plus IDs 1, 2, and 3. This leaves room for four more rows to be inserted.

As expected, the fifth insert after the CheckIdent (row 1142) causes another page split. This time, the four negative ID rows are moved to one page, and rows 1,2,3 are on the other page.

So far, the pattern seems bad. We’ve had two splits after inserting five rows, and we get another split on the ninth insert (row 1147). In this case, however, we have not bumped up against the positive IDs. We currently have a single page holding the previous 8 negative ID rows, and the ninth won’t fit at the end, so a new page is allocated with this single row.

Now we’re into a stable state. Every 8 inserts, a new page is allocated, and the inserts never again touch the pages containing the first positive rows.

Conclusion

The results of this test are pretty clear – the page splitting algorithms will quickly re-stabilise after re-seeding. You’ll no longer be inserting into the end of the clustered index B-tree, but you will be inserting into a consistent point, which is separated from the start of the values, and so you should not see any performance degradation.

You were stressing for nothing. Relax!