The subject of T-SQL Tuesday #016, kindly hosted by Jes Borland (blog | twitter), is Aggregation. Although it’s a fine topic, I could not figure out what I could write about aggregation that would capture my interest, and more importantly, dear reader, yours.

I thought about the topic ceaselessly while doing dishes, feeding children, and cleaning up the house. I considered aggregate functions, and optional grouping parameters. I twisted the topic inside and out, trying to figure out an interesting angle. I considered telling a story about the Tuples vs the Aggregates on their way to Query Station (and spent an embarrassingly long time on that train of thought). Finally, I went out for a run, thought of a topic pretty quickly and started turning the idea over in my mind for the next 7 km.

Columnstore Indexes

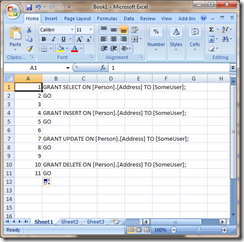

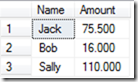

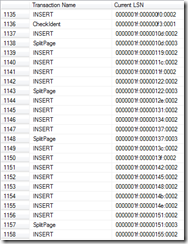

A columnstore index is a new feature of Denali, whereby an index is stored vertically – one column per page. Here is the whitepaper download – highly recommended, and only 5 pages of text. Imagine a row of a typical covering index (in this case, on AdventureWorks’ Person.Person table, where I’ve covered every column):

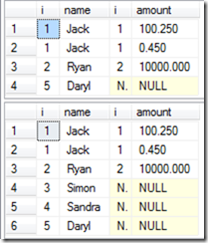

Every column of a row in this particular index is stored together on the same page. As each row is quite wide, only 5 rows will fit per 8 KB page.

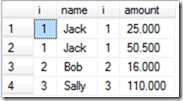

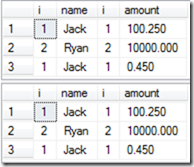

A columnstore index, however, stores each column on a separate set of pages. I am unsure of the ordering of the rows* but each page is compressed. As the column is likely to contain similar data, this means that each page can have excellent compression ratios – a factor of 4-15 is quoted in the whitepaper – and thousands of rows can fit on a single page.

* Due to a lack of internals documentation so far. Are they ordered by the column, or by the clustering key? I can see arguments for either way, but haven’t yet dived deep enough to decide one or the other.
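To make the compression argument concrete, here is a minimal sketch of dictionary encoding, one common way to shrink a column that contains few distinct values. It is an illustration only: I am not claiming this is the encoding Denali uses, and the city names, row count, and function names are all made up for the example.

```python
def dictionary_encode(values):
    """Replace each value with a small integer code into a list of distinct values."""
    dictionary = []   # distinct values, in order of first appearance
    code_of = {}      # value -> position in the dictionary
    codes = []        # one small integer per row
    for value in values:
        if value not in code_of:
            code_of[value] = len(dictionary)
            dictionary.append(value)
        codes.append(code_of[value])
    return dictionary, codes


def dictionary_decode(dictionary, codes):
    """Rebuild the original column from the dictionary and the codes."""
    return [dictionary[code] for code in codes]


# Hypothetical column: 10,000 city names drawn from only four distinct values.
cities = ["Wellington", "Auckland", "Christchurch", "Dunedin"]
column = [cities[i % len(cities)] for i in range(10_000)]

dictionary, codes = dictionary_encode(column)

# Size of the column if every string is stored in full.
raw_bytes = sum(len(value.encode("utf-8")) for value in column)

# Size if we store each distinct string once, plus one byte per row for its code
# (one byte is enough while there are at most 256 distinct values).
encoded_bytes = sum(len(value.encode("utf-8")) for value in dictionary) + len(codes)

print(f"raw: {raw_bytes} bytes, encoded: {encoded_bytes} bytes")
```

Storing small codes instead of repeated strings is one way that thousands of values from a single column could end up on one page.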

This allows for fantastic performance gains when performing aggregates, as fewer pages need to be read to service the query, and only the columns actually required by the query need to be accessed.
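A rough back-of-the-envelope sketch shows why fewer pages are read. The numbers are assumptions chosen for illustration: a hypothetical 20,000-row table, a row width picked so that 5 rows fit on an 8 KB page (as in the covering index above), and a single uncompressed 4-byte integer column. Page headers and compression are ignored, so real figures will differ.

```python
import math

PAGE_BYTES = 8 * 1024  # 8 KB page; header overhead is ignored in this sketch


def pages_needed(row_count, bytes_per_entry):
    """Pages required when each page holds as many whole entries as will fit."""
    entries_per_page = PAGE_BYTES // bytes_per_entry
    return math.ceil(row_count / entries_per_page)


ROW_COUNT = 20_000        # hypothetical table size
WIDE_ROW_BYTES = 1_600    # hypothetical row width, so 5 rows fit per page
INT_COLUMN_BYTES = 4      # one 4-byte integer column, uncompressed

# Row layout: summing one column still reads every page of full rows.
row_layout_pages = pages_needed(ROW_COUNT, WIDE_ROW_BYTES)

# Column layout: only the pages holding that one column are read.
column_layout_pages = pages_needed(ROW_COUNT, INT_COLUMN_BYTES)

print(f"row layout: {row_layout_pages} pages, column layout: {column_layout_pages} pages")
```

Under these assumptions the aggregate touches a few thousand pages in the row layout and about ten in the column layout, before any compression is applied.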

However, because columnstore indexes are read-only, they really only have applications in data warehouses.

Can we reproduce columnstore indexes in SQL Server 2008?

The question of whether it is possible to reproduce columnstore indexes in SQL Server 2008 popped into my mind, and the short answer is “No, not a chance”. Sorry.

Let’s look at the properties of a columnstore index.

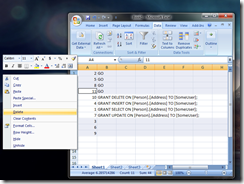

Read Only – SQL Server 2008 can certainly build index structures on read-only data. And, as it’s read only, separate aggregation steps can be created in 2008 to summarise data ahead of time. However, one of columnstore’s selling points is to remove the need to aggregate. Columnstore gives you a massive performance benefit with little effort.

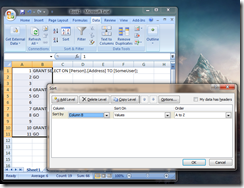

Highly Compressed – SQL Server 2008 can compress indexes. However, to get highly compressible indexes similar to columnstore’s, you’ll want only a single column per index, which makes each index less useful and forces you to join frequently.

Only select required columns – A query accessing a columnstore index will only access those columns that are needed by the query. To get the same sort of functionality in 2008, you would need to either create a covering index for each query you want (nothing like columnstore!), or join multiple indexes together (resulting in a large amount of logical reads).

Different table structure – Denali is able to use a completely different page structure, particularly as this data is read-only. SQL Server 2008 cannot do this and still leave the data usable from T-SQL.

No code changes – Denali’s implementation allows us to create a single columnstore index on all columns in the table, and we don’t need to modify any queries to use these columnstore indexes. It’s completely seamless.

I tried to create an example where we could create similar compressed indexes in 2008, but I was never able to merge multiple indexes together to query the data efficiently. Denali has specific optimisations available for columnstore indexes, and these cannot be reproduced. About the best we can do is to create a read-only compressed covering index, which is hardly impressive these days.

After a few hours considering this, my conclusion is that you are better off optimising your data warehouse for those queries that you know are going to come, and ad-hoc queries will need to continue to run slowly, at least until you’ve upgraded to Denali. Frequently run queries will benefit from extra customisation (pre-aggregation, specialised indexes with compression), but there is no chance of a similar payoff inside the database engine.
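The trade-off with pre-aggregation can be sketched in a few lines. This is a toy illustration with made-up sales rows and hypothetical function names, not a data warehouse design: a summary built ahead of time answers the question it was built for cheaply, while an ad-hoc question still has to scan the detail rows.

```python
from collections import defaultdict

# Hypothetical fact rows: (region, product, sales_amount)
sales = [
    ("North", "Bike", 120.0),
    ("North", "Helmet", 30.0),
    ("South", "Bike", 200.0),
    ("South", "Bike", 50.0),
    ("South", "Helmet", 20.0),
]


def build_summary(rows):
    """Pre-aggregate once (for example, during a nightly load): total sales per region."""
    totals = defaultdict(float)
    for region, _product, amount in rows:
        totals[region] += amount
    return dict(totals)


def total_by_product(rows, product):
    """An ad-hoc question the summary was not built for: scans every detail row."""
    return sum(amount for _region, name, amount in rows if name == product)


# Known, frequent query: answered from the small summary.
sales_by_region = build_summary(sales)
south_total = sales_by_region["South"]

# Ad-hoc query: the region summary is no help, so the detail rows are scanned.
bike_total = total_by_product(sales, "Bike")

print(south_total, bike_total)
```

Each new question needs either its own summary or a full scan, which is exactly the effort columnstore promises to remove.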

Not too long to wait now, and you’ll be able to take advantage of columnstore indexes!