Welcome to T-SQL Tuesday for April 12, 2011. This month is generously hosted by Matt Velic (Blog | Twitter), who poses the topic of APPLY, one of the many excellent additions to the T-SQL language in SQL Server 2005.

Welcome to T-SQL Tuesday for April 12, 2011. This month is generously hosted by Matt Velic (Blog | Twitter), who poses the topic of APPLY, one of the many excellent additions to the T-SQL language in SQL Server 2005.

If you read other T-SQL Tuesday posts from today, you’ll get some excellent tips on how to use APPLY, and a list of the excellent things you can do with it. I’m going to go in a slightly different direction, and examine what your options are when using APPLY with database compatibility level 80.

Books Online states that the database must be at least compatibility level 90 to use APPLY.

It turns out that you can create a query that uses APPLY, and have it run in compatibility level 80. Consider the following setup:

CREATE DATABASE Compat80

GO

ALTER DATABASE Compat80 SET COMPATIBILITY_LEVEL=80

GO

USE Compat80

GO

SET NOCOUNT ON

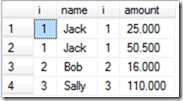

CREATE TABLE A (i int, name varchar(20))

CREATE TABLE B (i int, amount decimal(15,3))

INSERT INTO A (i, name) VALUES (1, 'Jack')

INSERT INTO A (i, name) VALUES (2, 'Bob')

INSERT INTO A (i, name) VALUES (3, 'Sally')

INSERT INTO B (i, amount) VALUES (1, 25.0)

INSERT INTO B (i, amount) VALUES (1, 50.5)

INSERT INTO B (i, amount) VALUES (2, 16.0)

INSERT INTO B (i, amount) VALUES (3, 110.0)

GO

Can we use an APPLY in Compatibility 80?

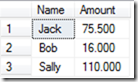

We’ve created a database in compatibility level 80, and created two tables, A and B. Let’s try a query with CROSS APPLY:

SELECT A.Name, dv.Amount FROM A

CROSS APPLY (SELECT i, SUM(Amount) Amount

FROM B WHERE B.i = A.i GROUP BY i) dv

There’s not much to say here, except that I proved Books Online wrong – I can do a CROSS APPLY in Compatibility 80. I don’t know the exact reason why I can do this, but it’s likely to be the simplistic nature of the query (which makes it less interesting). I’ll do a quick little victory dance, and we’ll move on.

A more useful use of APPLY

Where APPLY really shines is when functions are used. Let’s go ahead and create a simple function to test with:

CREATE FUNCTION dbo.fn_getB(@i AS int)

RETURNS @result TABLE

(

i int NOT NULL

, amount decimal(15,3)

)

AS BEGIN

INSERT INTO @result

SELECT i, amount FROM B WHERE i = @i

RETURN

END

GO

The logic for this function is slightly different than the previous, but it only serves to make it more interesting. Let’s go ahead and use this function in an APPLY, remembering that we’re still in Compatibility 80:

SELECT * FROM A

CROSS APPLY dbo.fn_getB(A.i) dv

Msg 102, Level 15, State 1, Line 2

Incorrect syntax near ‘.’.

That’s disappointing, and rather cryptic. Let’s try again after switching to Compatibility 90:

ALTER DATABASE Compat80 SET COMPATIBILITY_LEVEL=90

GO

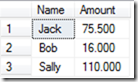

SELECT * FROM A

CROSS APPLY dbo.fn_getB(A.i) dv

GO

Success!

Getting around this restriction

We’ve seen that APPLY using functions is a handy tool, but can we still use it in databases that are in Compatibility 80? The following is a workaround, but it’s a bit of a hack. I just like thinking outside the box.

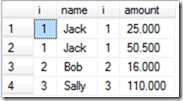

The limitation here is that we cannot let a Compatibility 80 database execute the query. We can, however, run the query from within a different database, such as Master (ignoring permission issues) by using sp_executesql.

EXEC sp_executesql N'USE Master;

SELECT * FROM Compat80.dbo.A

CROSS APPLY Compat80.dbo.fn_getB(A.i) dv '

GO

The main reason I’d want to use APPLY is to use the DMFs with the DMVs provided by SQL Server 2005, such as sys.dm_exec_*. However, these DMVs typically supply a database_id column, meaning you can run the query from within Master anyway. With that in mind, I can’t think of any real benefit of this technique – it’s almost always a better option to simply upgrade your databases to compatibility 90 or above – we are in 2011, after all.

Thanks again for this month’s edition of T-SQL Tuesday, Matt!