SQL Server provides two primary ways of communicating data to the client – Result Sets and Messages. Typically, a client application will respond to Result Sets, and any error messages that are raised by SQL Server with a severity higher than 10. For error messages with a severity of 10 or less, the .NET event SQLConnection.InfoMessasge can be used to return information during query processing.

In Management Studio, the difference between a Message and and Error is that the Error is flagged in red on the Messages result panel and may trigger rollbacks or break connections, depending on the severity of the error.

PRINT

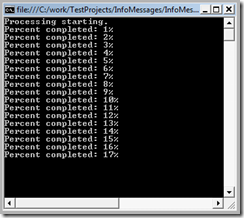

One use of communicating data back to the client is for stored procedures to let the user know where they are up to. While this could be used for production code, it is usually used as a poor man’s debugger. By sprinkling PRINT “Currently at point x†statements through your stored procedure, you can get an inkling of where the processing is up to.

However, PRINT has a noticeable drawback – the results are not returned immediately. Instead, anything sent to PRINT will be buffered, and not released until the buffer is full, or the query completes. This buffer is around 8KB in size.

“No problem!†I hear you cry. “I’ll just pad my PRINT message out to be 8KB!†Nice try, but unfortunately, the PRINT statement will trim to varchar(8000) or nvarchar(4000), which isn’t enough. For example:

PRINT 'A' + REPLICATE(' ', 8000)

PRINT 'B' + REPLICATE(' ', 124)

WAITFOR DELAY '00:00:05'

PRINT 'C'

In this example, we’re using REPLICATE to try to pad out the PRINT’s message, but we need two PRINT statements to get anything back immediately. By running the example, and flicking to the Messages screen in Management Studio, you can see if A is being returned before or after the WAITFOR DELAY statement. In my tests, the 124 on the B line is not a static value – it was 134 for a different server.

So, two PRINT messages does not really seem like an acceptable solution.

RAISERROR

Enter RAISERROR. While the RAISERROR syntax is slightly more complicated, it’s also a lot more powerful (although the misspelling is quite annoying).

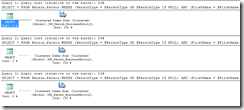

RAISERROR ('Message', 0, 1, ..., ...) WITH NOWAIT

The first parameter is simply a textual description of the error/message. Next (0) is the Severity level. If this value is 10 or less, it will be counted as a Message, and not as an Error. The 1 indicates the State of the message – for a message, you’ll generally keep this at 1. After the State, you can list multiple parameters that will be inserted into the first parameter – more on this shortly.

Example 1 shows two methods of RAISERROR, one where the text of the message is stored in a variable, and one where it is included in the RAISERROR command. This simply returns “Currently at position 56†in both instances. Note the WITH NOWAIT. This tells SQL Server to send the message back to the client immediately, effectively avoiding the problems PRINT has.

-- Example 1

DECLARE @msg nvarchar(200) = 'Currently at position %d.'

RAISERROR (@msg, 0, 1, 56) WITH NOWAIT

RAISERROR ('Currently at position %d.', 0, 1, 56) WITH NOWAIT

Note that the equivalent PRINT statement would be:

PRINT 'Currently at position ' + CONVERT(varchar(10), 124) + '.'

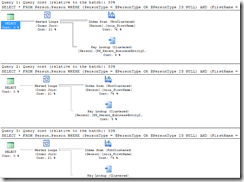

Example 2 shows how easy it is to output a text value. This is useful for displaying the current value of the a loop.

-- Example 2

DECLARE @somevalue varchar(200) = 'Melbourne'

DECLARE @msg nvarchar(200) = '@somevalue is currently %s.'

RAISERROR (@msg, 0, 1, @somevalue) WITH NOWAIT

Finally, Example 3 shows how you can combine multiple values in your output.

-- Example 3

DECLARE @somevalue varchar(200) = 'Melbourne'

DECLARE @msg nvarchar(200) = '@somevalue is currently "%s" at position %d.'

RAISERROR (@msg, 0, 1, @somevalue, 124) WITH NOWAIT

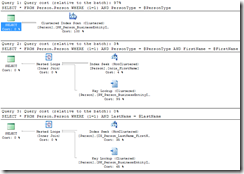

Monitoring

Another benefit of RAISERROR over PRINT is that it is much easier to trace RAISERROR in Profiler. Simply capture “User Error Message†events for Error 50000, and you’ll get the messages. Of course, you can always filter on the severity or the SPID, or any other filter that is appropriate.

So, there you have it! RAISERROR is a much more sophisticated method of returning status messages to the client that using PRINT.